..and other such nonsense.

This report left Owen Paterson convinced that the NHS is failing and Something Must Be Done.

I think this report, or reports of this report, will be used to demonstrate why the NHS Must Be Privatised by some politicians and newspapers, and because of the coverage it has had I think it’s worth assessing.

Brief evidence critique:

page 24 (this is really the start of the report. There is a lot of preamble.)

The charge is that the UK has comparatively high-ish amounts of ‘amenable mortality’. This isn’t a term used much in mainline UK journals: it rests on big data jumping to highly contentious conclusions. The claim is that amenable mortality is a good indicator of how well a health service is performing. It is referenced to Eurostat data. This metadata is drawn from death certificates. We know these are often unreliable, and this is an international phenomenon likely to have cultural elements. Amenable deaths (i.e. deaths that could have been avoided) include, in people under 75, deaths due to hepatitis, HIV, bowel/skin/breast/cervical/bladder/thyroid cancer, epilepsy, diabetes, pneumonia, hypertension and heart disease.

This is plainly silly: no health service can pretend to be solely responsible for the rate of incidence or survival of people with critical conditions. There is no medicine that could promise a cure for any of these (with the exception of hypertension, which is usually a risk factor, not a disease; and even then, I am not aware of any intervention with an NNT of exactly 1, even parachutes). Instead, it would have been far better to look at Marmot’s work on poverty and health and ask how much unfair societies contribute to earlier deaths.

page 26

Small numbers mean more unreliability: a fact which is mentioned. Yet the claim is that in 2009 Belgium, Italy, Luxembourg, France, Spain and Switzerland had zero “avoidable deaths per 1,000 cases due to complications of pregnancy, childbirth and the puerperium.” Hmm. Except that in Italy, researchers found that only 37% of all maternal deaths were included in official data in 2011; deaths occurred in Switzerland as they did in France, where audit conclusions were that some deaths, including in 2009, were potentially avoidable (impossible to prove, but it does show that the data being presented in terms of no avoidable maternal deaths is wrong).

Page 27.

General point. The language is quite strange for a supposedly independent report (we will come on to this later.) The UK is ‘unusually’ ahead. Austria is ‘exceptionally bad’. I was taught, when writing academic reports, to describe data with caveats, and make conclusions in a separate discussion so that it was clear where facts and opinion separated.

page 30.

More screening, for example for breast cancer, means that more ‘cancers’ are detected of the type that would never do harm. This in turn means that it looks like a country is doing very well for cancer care, but no benefit is realised for patients. The country that does more screening will look better because fewer people die of cancer – but these were never cancers that were going to kill.
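A rough sketch of the arithmetic behind this point, using made-up numbers (none of them come from the report or from Eurostat). Two hypothetical countries have exactly the same number of cancer deaths, but one also detects cancers through screening that would never have done harm:

```python
# Hypothetical numbers for illustration only; they are not taken from the report.

def five_year_survival(diagnosed, deaths):
    """Share of diagnosed patients still alive five years after diagnosis."""
    return (diagnosed - deaths) / diagnosed

population = 100_000
progressive_cancers = 1_000  # cancers that would do harm if left alone
deaths = 400                 # deaths among those patients, identical in both countries

# Country A screens little: only the progressive cancers are diagnosed.
# Country B screens heavily: it also finds cancers that would never have done harm.
overdiagnosed = 500

survival_a = five_year_survival(progressive_cancers, deaths)
survival_b = five_year_survival(progressive_cancers + overdiagnosed, deaths)

# Deaths per head of population are the same in both countries.
mortality_a = deaths / population
mortality_b = deaths / population

print(f"Country A: survival {survival_a:.0%}, mortality {mortality_a:.2%}")
print(f"Country B: survival {survival_b:.0%}, mortality {mortality_b:.2%}")
```

With these invented figures, survival looks like 60% in one country and 73% in the other, although exactly the same number of people have died. This is why mortality in the whole population is usually a safer comparison than survival among those diagnosed.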

However there are other, larger, concerns about how to interpret big data like this. The Eurocare data, for example: “The interpretation of complex multinational data probably needs to be more subtle. For example, survival after a diagnosis of cancer correlates clearly with the general health of a country’s citizens (figure). If—because of multiple morbidities not directly related to cancer—patients with cancer are unfit for surgery, radiotherapy, or chemotherapy, then no amount of investment in cancer services will improve outcomes. Poor cancer survival can be a result of injustices embedded deeply within an unhealthy society.”

Small differences in outcomes multiplied up to extrapolate to a large population are very susceptible to magnifying uncertainty and presenting it as fact.

page 35

There are conclusions such as “If the UK’s lung cancer patients had been treated in Iceland or Australia, where survival rates are about five percentage points higher, an additional 2,400 lives a year could have been saved.”

It should be

“if the UK was identical in population, personal and environmental risk factors, social advantage, wealth, health equity, genetics, and healthcare system, then some deaths could have been delayed.”
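To see how sensitive this kind of headline is, here is a minimal sketch. The five percentage points and the 2,400 lives come from the quoted claim; the range of 1 to 9 points is purely an assumption for illustration, standing in for not knowing how much of the gap is really down to the health system.

```python
# Figures from the quoted claim: a five percentage point gap and 2,400 lives a year.
claimed_gap_points = 5
claimed_lives = 2_400

# Number of patients a year that the claim implies (2,400 is 5% of this number).
implied_patients = claimed_lives * 100 / claimed_gap_points

def lives_per_year(gap_points):
    """Lives a year implied by a survival gap given in percentage points."""
    return implied_patients * gap_points / 100

# ASSUMPTION, for illustration only: suppose the part of the gap that is really
# due to the health system could be anywhere between 1 and 9 points.
low_points, high_points = 1, 9

print(f"Implied patients a year: {implied_patients:,.0f}")
for points in (low_points, claimed_gap_points, high_points):
    print(f"{points} point gap: {lives_per_year(points):,.0f} lives a year")
```

Under that assumed range, the same multiplication gives anything from 480 to 4,320 lives a year. The single figure of 2,400 hides all of that uncertainty.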

page 47

Now we are on to stroke. The data on stroke is unlikely to be reliable for several reasons. One is coding accuracy. Another is that overdiagnosis is possible through the large variation in amount of use of CT/MRI in picking up old strokes which aren’t going to impact on mortality outcomes.

page 52

“We therefore cannot tell by how much an elderly patient suffering from pneumonia or obstructive pulmonary disease would increase their survival chances by seeking treatment elsewhere. But it is safe to say that the NHS is not delivering on this measure, not least because external conditions are actually favourable for the NHS.”

I don’t think this is correct. External conditions are not just about smoking (and the hazard of smoking related death now does not correlate with current smoking but past smoking rates) but other factors such as environmental pollution and, again, health inequalities.

page 58

For some reason the author has chosen a 3 hour wait in A+E as a target and declares ‘red’ to be more than 3 hours. This isn’t helpful. Some things can safely wait a day, other things cannot wait two minutes. In the UK the target (which has itself created many problems) is a 4 hour wait in A+E. Bizarrely, the report says that seeing your GP on the same day you request an appointment is the exception rather than the norm. This isn’t a good measure of quality – in fact, continuity of care is a better measure – and GPs care more for people with chronic conditions than acute conditions, and have an obligation to see people in need of urgent care urgently. It is cited to this report, which I am tempted to diss on account of how many exclamation marks there are! but I will not! Instead I will merely note that access is not the same as quality: the UK absorbs urgent needs every day because staff go beyond the letter of their contract to do so.

page 64

After telling us that take up of new drugs or procedures is not necessarily a good thing (as costs can easily outweigh clinical usefulness) the author concludes

“Drug consumption is an input, not an outcome. We cannot judge what market penetration rate would be ‘ideal’, or which country has got the balance ‘right’. But suffice it to note that the NHS could not, by international standards, be described as a great facilitator of medical innovation.”

Another way to phrase this is

The NHS mainly does a good job of assessing the claims of companies about their products before agreeing to fund them. By doing this it protects people against innovations that add little to their care or waste resources, meaning that money is kept to spend on other useful interventions.

page 64 (continued)

In fact, the citation this part of the document relies on states “The causes of international variations in drug usage appear to be complex, with no single consistent cause being identified across disease areas and drug categories. However, in assessing and explaining the potential causes, a number of common themes emerge: Differences in health spending and systems do not appear to be strong determinants of usage.”

page 65

Spending doesn’t always equal volume of testing; it depends on the comparative cost of the tests.

The conclusion is that we are under testing patients: “a false economy”, as the author says, because “given the NHS’s poor results on so many outcome measures…” But this is an unsafe conclusion, and it means that the caveats placed earlier about the uncertainties of this data are being minimised.

Page 69

I present another argument: that the NHS is relatively underfunded for what it is trying to achieve (dealing well with multi morbidity; social inequality; and the austerity cuts to social care) but also that it continues to spend money on the wrong things. It would be quite easy to spend half the GDP on healthcare and make no difference to quality or quantity of life, through spending on more re-disorganisations, non evidence based wasteful policies, or policies that lead to harmful overtreatment and overdiagnosis.

Page 72

A comparison of ‘potential gains in life expectancy’ through ‘poor efficiency improvements’. The conclusion of the author is that ‘we have no reason to believe that the NHS’s performance would match that of its Western European neighbours if it matched its spending level’.

It is cited to this report about the relative efficiencies of different countries’ healthcare systems. It’s immensely complex, relying on big data, and of course the UK has been told to make £22 billion worth of efficiency savings. As John Appleby says, the hospitals have already switched to lower energy light bulbs. Cost savings are now coming in the form of waiting lists and more unsafe staffing. We should be examining non evidence based policy making for savings, see comments above, but we are not.

Page 73

Factual inaccuracy. Contrary to what is written, it is allowable to pay for some private treatment and then revert to the NHS. I have had recent confirmation of this from government. I think this is morally wrong, because it allows private companies to do non evidence based tests and then let the NHS sort out the mess. It is a cause of NHS inefficiency, and if the author was concerned about cost saving for the NHS, it would make sense to ensure that the NHS did not take on the burden of fixing the private sector’s false positives after it has profited and run away.

Page 93

After having put in various caveats about the data reliability/comparability, the conclusion is that the NHS is ‘lagging behind’ on measures where ‘robust data is available’. I think there is a lot to be said for examining different healthcare systems. But the focus on comparing data to the point of deciding how many deaths could be delayed if we had the health system of another country is misguided. It isn’t about a different health system, it’s about a different population, culture and set of risk factors and behaviours as well. Big data can be very useful but the focus first of all must be on understanding its uncertainties and limitations rather than making unsafe conclusions.

page 95

The characterisation of ‘NHS defenders’ who criticise the data being presented is unhelpful. Bias is a large problem in research and medicine, and it is always helpful to be clear about who is funding studies, why, and what they may have to gain from them. I have always been clear that I am a passionate supporter of the NHS. It should reduce inequalities, by protecting people from under treatment and overtreatment; and it is an endeavour which should consolidate and exemplify the best of human communities. Years ago I made a statement (on the ‘welcome’ part of this blog) which publicly declared this; my conflicts of interest are there (and on whopaysthisdoctor.org). I am a GP contracted to the NHS. This potential bias does not preclude me from examining the data and challenging the incorrect assertions in this document. Nor should it preclude the Rt Hon Owen Paterson, who is a consultant to the diagnostics company sponsoring the paper, written by a think tank established by him, from writing its foreword.

The claim is made that the fact that France includes only a small proportion of people in its cancer registry (compared to nearly all patients in the UK) is only a problem ‘if we had reasons to believe the French registry is not a random sample’. Well, it clearly isn’t a random sample as you would expect in a clinical trial, and there has been much work done on describing its inaccuracies.

The argument is then made that complexity doesn’t matter as long as the data is comparable. Imperfect data can still be useful (there are few, if any, perfect clinical trials) but imperfect data shouldn’t be used to stretch to unsafe conclusions.
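Why random versus non-random matters can be sketched with a toy example. All of the figures below are made up for illustration and are not the real French or UK registry data; the two groups and their survival rates are assumptions. A registry that records a random fifth of patients reproduces the true survival rate, while one that mostly records patients from better-resourced areas does not:

```python
# Made-up figures for illustration; not real registry data.
# Each group: (number of patients, five-year survival in that group)
groups = {
    "better-resourced areas": (20_000, 0.60),
    "less well-resourced areas": (80_000, 0.45),
}

def reported_survival(coverage):
    """Survival as a registry would report it, given the share of each group it records."""
    recorded = {name: n * coverage[name] for name, (n, _) in groups.items()}
    survivors = sum(recorded[name] * groups[name][1] for name in groups)
    return survivors / sum(recorded.values())

# Everyone recorded: the true overall rate.
true_rate = reported_survival({name: 1.0 for name in groups})

# A random 20% of patients: every group is covered equally.
random_sample = reported_survival({name: 0.2 for name in groups})

# A non-random registry: most patients come from better-resourced areas.
skewed_sample = reported_survival({
    "better-resourced areas": 0.8,
    "less well-resourced areas": 0.05,
})

print(f"True rate:       {true_rate:.0%}")
print(f"Random sample:   {random_sample:.0%}")
print(f"Skewed registry: {skewed_sample:.0%}")
```

In this invented case both registries record the same number of patients, but the skewed one overstates survival by nine percentage points. A small registry is not in itself the problem; who ends up in it is.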

I look at big data a lot in my day job (referrals, admissions, operations, prescribing). We spend a lot of time discussing it. Most of that time is spent trying to explain it and see if we should act on it. Some of this time is likely wasted. Looking at international data can be interesting, but the question being asked of it is critical. Can the NHS learn from looking to see what works, and what doesn’t work, elsewhere? Almost certainly. Can this data be accepted as showing the NHS outcomes are ‘amongst the worst’ and ‘vastly more robust than its critics acknowledge’? I don’t think so. I would be far more interested to look at more detailed data that is capable of examining on-the-ground differences and similarities between countries.

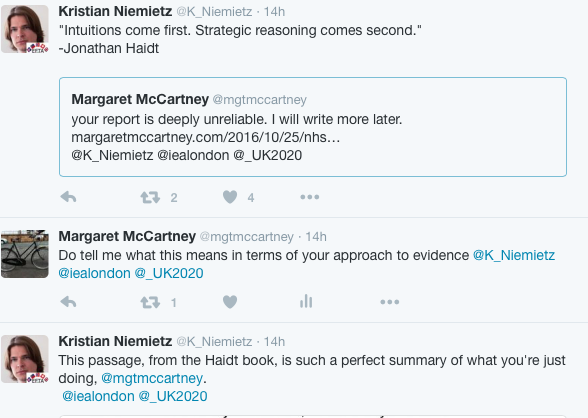

I had an interesting reaction on twitter from the author to the earlier, half written version of this blog.

He tweeted

I think this means that as a supporter of the NHS, pointing out flaws/errors in the data can be dismissed on the basis that I am a supporter of the ethos and values of the NHS.

It would be far better to debate the data, and correct the errors in the report.

Caveats matter. Medicine has a long history of reaching unsafe conclusions by using unreliable data and doing very active harm because of it.

Update, 27/10/16

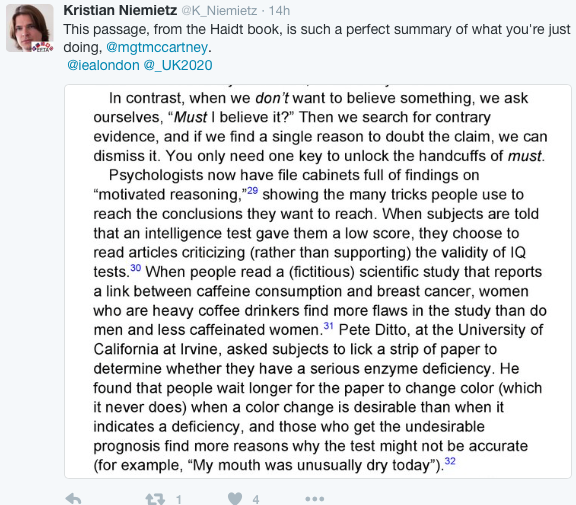

After being called a ‘commie doctor’, and having this blog called ‘pathetic pedantry’, on Twitter by Dr Niemietz, it is tempting not to engage any more: name calling/ad hom attacks are boring, hurtful, waste time, and do not progress the argument (except sometimes to show that there is no argument on evidence being made). The author of the report went on to produce this reply.

https://iea.org.uk/the-uk-health-system-a-response-to-critics/

It does not adequately address the criticisms I’ve made.

First, bias. It is entirely true that I support the NHS and possible that my bias makes me conclude differently to what the evidence suggests. I have always been open about this. I have never been a member of a political party. I have made a public declaration of interests (DOI) covering my potential competing interests, on my blog and on whopaysthisdoctor.org.

Critically, however, bias affects everyone. It may be useful to have a potential competing interest statement from the author.

Second, the charge that the NHS is falling behind other European countries in terms of quality of healthcare. It may well be, because it is certainly falling behind itself: waiting lists are up, GP services are increasingly stretched, social care is being cut, benefits sanctions cause the most appalling distress that stretched mental health services are increasingly unable to deal with. We don’t need European data to tell us this, however. We just need to look at the evidence already available within the UK.

The problem with the report is that although it has, indeed, placed some caveats about the reliability of comparable data, it still draws definitive conclusions about what might be achieved if we were more like (another country). For example, I have shown how almost all UK patients are included in cancer registries whereas in other countries the figures may be very low; this reflects non random entry into registers and produces bias. It is also untrue to state that the level of error in (I think) mortality data in different countries is equal and will therefore cancel out bias: we do not know this is the case.

Nor has the author commented on the frank errors in his assertions about mixing private and NHS health care, or the assertion that there were no potential avoidable maternal deaths in several European countries.

Point of order: this wasn’t ‘everything I could throw at it’ – it was all I could do in the time I had available. I would suggest formal peer review in an academic journal if the author would like more thorough advice on how to improve it. It’s true that I included the word ‘privatised’ in the title of this blog despite it not being included in his report – because I was pretty sure that was what the author of the report would believe, and indeed, in his reply he has confirmed that.

Dr Niemietz says that he is not sure what my conclusions are. I’ve written above: it’s often very useful to look at big data if you are keen to compare and understand what the differences are. It’s hazardous to predicate policy on interpreting datasets which come with large margins of error. More detailed comparative data may be useful to try and outline and explain similarities and differences and this may lead to interventions capable of improving care. I don’t think the data is useless, at all. I do think it is being used wrongly. Had his report not led to large amounts of media coverage, with another report being promised, I wouldn’t have looked it up. But it did, and it has been used by e.g. Karol Sikora to say “we should not continue endlessly with a system of healthcare which we know costs lives” (worth noting that Sikora is on the editorial board of this report, and that his ihealthltd.com clinic offers all sorts of non evidence based health checks: exactly the kind of problem of overdiagnosis and treatment that happens so often in the private health sector). In the end, the European data is a red (?blue) herring. The NHS is struggling, and this is not simply an issue of underfunding. It is an issue of what we choose to spend the money on, and how we decide that process. We have wasted millions on non evidence based, wasteful, short term, political vanity projects. Another costly, non evidenced reorganisation is the very last thing it needs.

Updated, updated, update…

in response to stuff on twitter

- as well as looking at bias (awaiting DOI statements!) it would be useful to consider association versus causation. European differences in mortality rates for various conditions can’t demonstrate whether the cause is the health service or, as the Eurocare data puts it, ‘different diagnostic intensity and screening approaches, and differences in cancer biology. Variations in socioeconomic, lifestyle and general health between populations might also have a role’.

- so fig A in the appendix of the report – axis wrongly labelled – individual countries not labelled – it doesn’t take account of screening differences across Europe and it’s mortality corrected for age that is the more useful data (more breast screening will pick up more cancers that don’t behave aggressively, meaning that data will be skewed in favour of countries that do more screening)

- the big concern about coding is that it’s non-random: this means that conclusions come with a high risk of bias in favour of wherever coding is done more thoroughly (staff with time to do coding, better funding, etc)

- fig 4, page 26; author accepts that the data showing that several countries have no potentially amenable maternal mortality is incorrect; I suggested that this brought the reliability of the dataset into question and was told by the author it did not. I would like to see it in full but there doesn’t appear to be a full citation, I will ask for it.

- again, an exercise in data comparability is often useful but problems occur when extrapolation occurs which is unsafe, or when the data is treated as more reliable than it is, or when association is confused with causation.

- The NHS is underfunded and is wasting money on non evidence based policy. I’d prefer to look at this data and use it to spend money better on the NHS to ensure that more people get better care. This data is more reliable (eg healthchecks, dementia screening, advance care planning) because it has been subject to trials.

- On twitter I was told that it wasn’t a big error to have made mistakes in the report about the mixing of private and NHS care. Yet it happens all the time. People are seen for a private appointment and then use NHS care for the same problem. Or are seen for a 3K health check in a luxe clinic and have, inevitably, 20 different false positives which they are sent into the NHS to deal with. Both create problems for the NHS and I don’t think it should be allowed, but it is.

5 Responses to “NHS is failing and must obviously be privatised”